I'm a research scientist at Google DeepMind in San Francisco, where I lead the GenMedia 3D team. I did my PhD at Stanford University advised by Surya Ganguli in the Neural Dynamics and Computation lab. My thesis was on computational tools to develop a better understanding of both biological and artificial neural networks. I did my undergrad at Carnegie Mellon University, where I was advised by Tai Sing Lee. I've worked at Google DeepMind, Google Brain, Intel Research Pittsburgh, and the NYU Center for Neural Science.

I'm interested in machine learning, computational neuroscience, information theory, computer vision, optimization, and cycling. My current focus is on generative models to understand and create 3D worlds.

The Veo team and collaborators at Google DeepMind flow / project page

Rundi Wu, Ruiqi Gao, Ben Poole, Alex Trevithick, Changxi Zheng, Jonathan T. Barron, Aleksander Holynski CVPR 2025 (Oral Presentation) project page / arXiv Turn videos into dynamic 3D scenes that you can move through in real time.

Alex Trevithick, Roni Paiss, Philipp Henzler, Dor Verbin, Rundi Wu, Hadi Alzayer, Ruiqi Gao, Ben Poole, Jonathan T. Barron, Aleksander Holynski, Ravi Ramamoorthi, Pratul P. Srinivasan CVPR 2025 project page / arXiv Turn inconsistent captures into consistent multiview images.

Ruiqi Gao*, Aleksander Holynski*, Philipp Henzler, Arthur Brussee, Ricardo Martin Brualla, Pratul P. Srinivasan, Jonathan T. Barron, Ben Poole* NeurIPS 2024 (Oral Presentation) project page / arXiv CAT3D uses a multi-view diffusion model to generate novel views, then simply feeds these views into NeRF/3DGS reconstruction. Create anything in 3D in 1 minute!

Sirui Xie, Zhisheng Xiao, Durk Kingma, Tingbo Hou, Ying Nian Wu, Kevin Murphy, Tim Salimans, Ben Poole, Ruiqi Gao NeurIPS 2024 arXiv Distill a diffusion model into a one-step generator using EM.

Siddhant Jain*, Daniel Watson*, Eric Tabellion*, Aleksander Holynski, Ben Poole, Janne Kontkanen CVPR 2024 project page / arXiv Fast and efficient video interpolation with cascaded diffusion models.

Rundi Wu*, Ben Mildenhall*, Keunhong Park, Philipp Henzler, Ruiqi Gao, Daniel Watson, Dor Verbin, Pratul Srinivasan, Jonathan T. Barron, Ben Poole, Aleksander Holynski* CVPR 2024 project page / arXiv 3D reconstruction of real-world scenes from only a few photos.

Dave Epstein, Allan Jabri, Ben Poole, Alexei A. Efros, Aleksander Holynski NeurIPS 2023 project page / arXiv Self-guidance is a method for controllable image generation that guides sampling using only the attention and activations of a pretrained diffusion model.

Amit Raj, Srinivas Kaza, Ben Poole, Michael Niemeyer, Nataniel Ruiz, Ben Mildenhall, Shiran Zada, Kfir Aberman, Michael Rubinstein, Jonathan T. Barron, Yuanzhen Li, Varun Jampani ICCV 2023 project page / arXiv Combining DreamBooth (personalized text-to-image) and DreamFusion (text-to-3D) yields high-quality, subject-specific 3D assets with text-driven modifications.

Guandao Yang, Abhijit Kundu, Leonidas Guibas, Jonathan Barron, Ben Poole ICLR Neural Fields Workshop 2023 Learn a regularized set of NeRFs in parallel, then learn a 3D diffusion model that can generate new NeRFs.

Ben Poole, Ajay Jain, Jonathan T. Barron, Ben Mildenhall ICLR 2023 (Outstanding Paper Award) project page / arXiv / gallery We optimize a NeRF from scratch using a pretrained text-to-image diffusion model to do text-to-3D generative modeling.

A general framework for training and sampling from score-based models enabling likelihood computation and controllable generation.

Ajay Jain*, Ben Poole* NeurIPS 2022 Score-Based Models Workshop project page / paper Sometimes bugs are effective MCMC samplers for score-based models.

Ajay Jain, Ben Mildenhall, Jonathan T. Barron, Pieter Abbeel, Ben Poole CVPR 2022 project page / arXiv / video Supervising the CLIP embeddings of NeRF renderings lets you generate 3D objects from text prompts.

A new model class for discrete variables encompassing order-agnostic autoregressive models and absorbing discrete diffusion.

A general-purpose learned optimizer.

SOTA likelihoods using diffusion models with a learnable noise schedule.

A general framework for training and sampling from score-based models enabling likelihood computation and controllable generation.

Tractably learn and sample from a sequence of EBMs based on a diffusion process. High sample quality and stable long-run MCMC chains.

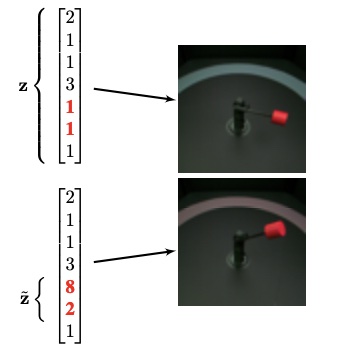

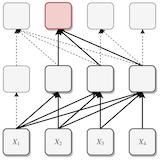

With a causality-inspired twist, disentangled representations are identifiable in theory and practice.

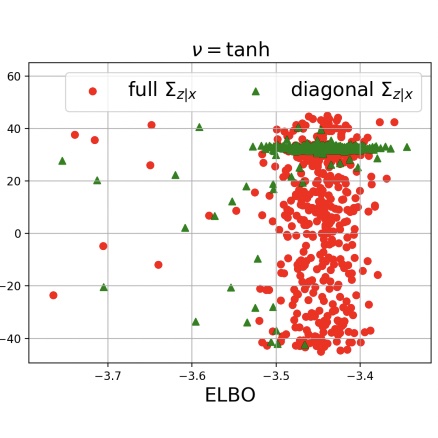

Heuristics in VAEs can lead to uniqueness and beneficial regularization.

The Variational Information Bottleneck can be rederived as Half-Bayesian.

Old, new, and improved estimators of mutual information with neural nets.

Fast-sampling generative models for discrete data.

Avoid posterior collapse by lower bounding the rate.

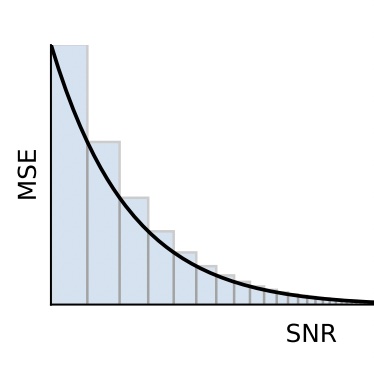

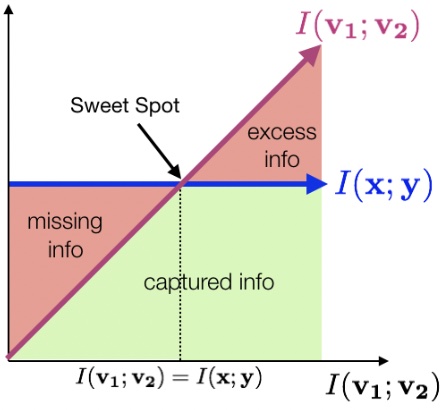

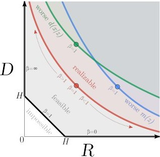

Understanding tradeoffs in VAE models through the lens of information theory.

Continuous relaxation training of discrete latent-variable models can flexibly capture both continuous and discrete aspects of natural data.

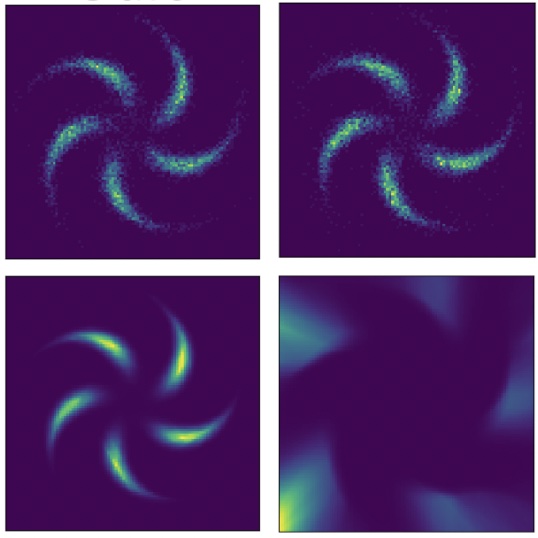

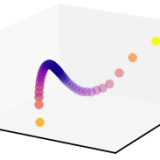

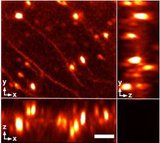

Large-scale cellular-level imaging in the mammalian brain using light-field microscopy: 1 × 1 × 0.5 mm³ at 100 Hz.

Learns to solve tasks sequentially without forgetting by learning which weights are important.

Random neural networks show exponential growth in activation patterns and more.

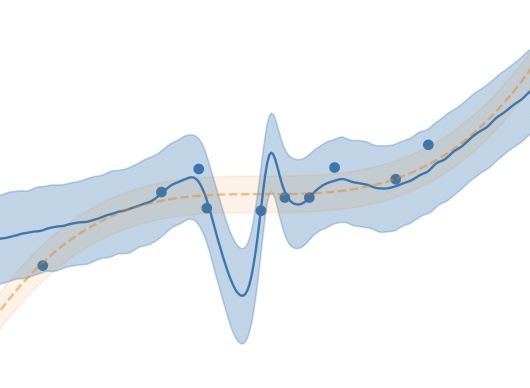

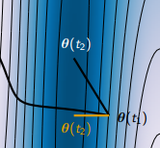

Extends dimensionality reduction techniques to account for trial-to-trial variability in timing.

Efficient gradient estimator for categorical variables.

Stabilize GANs by defining the generator objective with respect to an unrolled optimization of the discriminator.

Jointly learn a generative model and an inference network through an adversarial process.

Random neural networks have curvature that grows exponentially with depth.

A new take on the Generative Adversarial Network training procedure.

Fruit flies detect motion using an algorithm very similar to the one humans use.

Fast and accurate edge-aware smoothing. Differentiable for all your deep learning needs.

Speed up quasi-Newton methods by maintaining a low-dimensional approximation of the Hessian for each minibatch.

Derives analytic regularizers for different forms of noise injection, and shows how alternative types of additive noise can improve over dropout.

Complex tasks, like the Space Fortress video game, can be decomposed into a set of independent reusable components.

Correct for translations and rotations of noisy volumetric data without a clean reference volume.
